Flying car concept by the late Syd Mead, Emerge advisor and friend

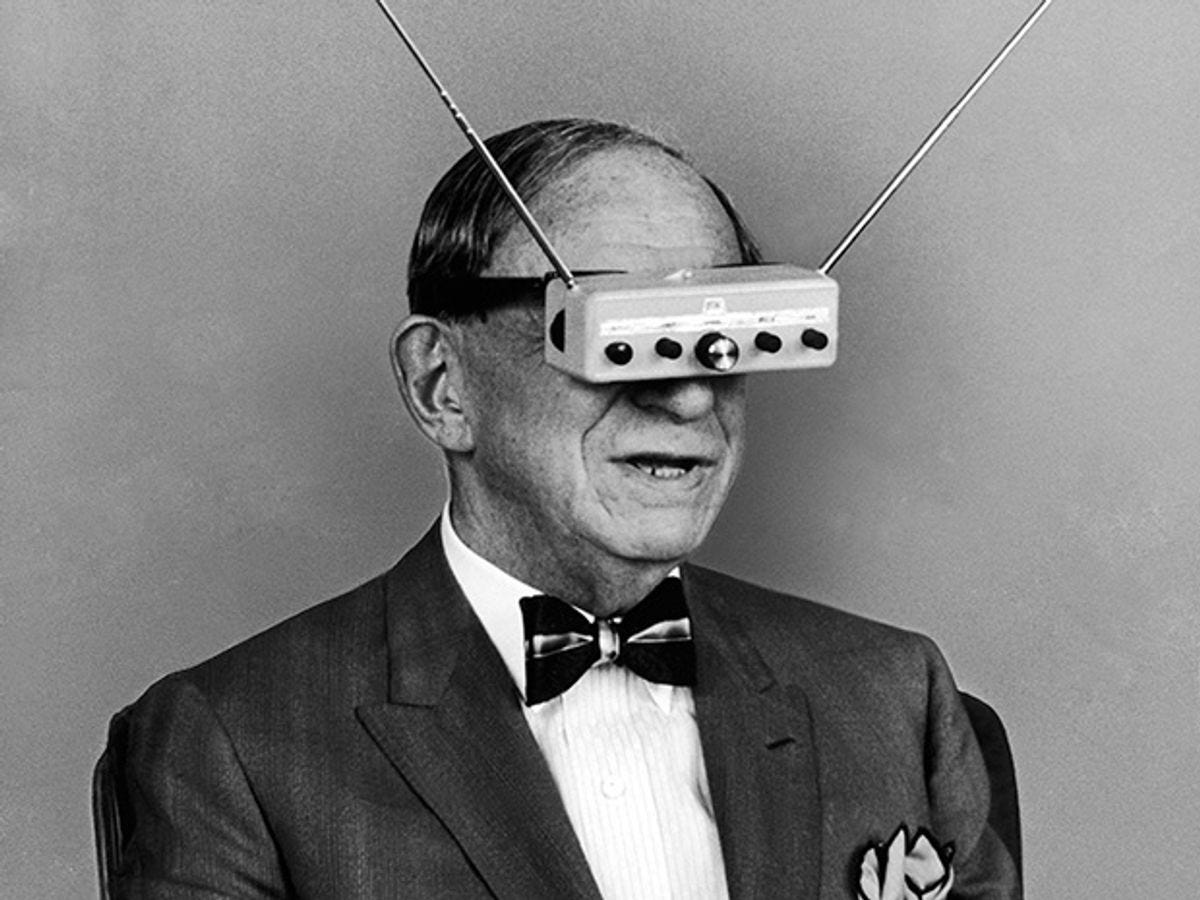

“The first version of a new medium imitates the medium it replaces.” - Marshall McLuhan

I first read this quote in Kevin Kelly’s The Inevitable, which, by the way, is an incredible read. This pattern of mimicry is well observed:

first (latest wave of) VR experiences were just video in 3D

first TV shows were people behind a desk talking into a microphone

first car was literally a wooden carriage without a horse

first computer was organized into files and folders like a physical office

We as a society, at least in Western cultures, tend to discount past learnings, and I found myself asking whether this pattern is indeed inevitable or possibly avoidable as we look toward and build a new paradigm of human-computer interaction, which some call the Metaverse.

THE METAVERSE

I’ve been participating in a number of Metaverse panels and councils lately, and I can’t help but wonder: what elements of the current computing paradigm (mobile) are we thoughtlessly (and needlessly) mimicking as we build the Metaverse?

Matthew Ball, an advisor, investor, and arguably the leading authority on the matter, has a lot to say on the subject, and I like what he said recently: that “the Metaverse is a pseudo-successor to the current internet”, not entirely replacing it but providing a substantially new paradigm through which we interact digitally. In fact, the same could be said of other tech mediums in our lives today:

with VR we didn’t (and won’t for a while) stop watching TV and playing console games

with mobile computing we didn’t entirely stop using desktop computing (in fact I’m on my laptop far more hours a day than my mobile)

with desktop computing we didn’t entirely stop making phone calls

with phone calls we didn’t stop sending physical mail

Over a long enough time, however, paradigms do fade away (think smoke signals or the telegraph) . . . or do they just come full circle? (a deep dive in a future post)

To answer my original question: I do think there are a few habits and manifestations of our current “online” reality that should be re-imagined, re-defined, and reconstructed as we build pieces of the Metaverse:

Non-binary: In our current mobile/desktop computing reality, you’re either “in it or you ain’t”. The nature of the medium itself calls for 100% focus. I think this will and should change. At Emerge, we’re certainly thinking about how you can flow in and out of various states of reality, digitally and physically. In a visual sense, this is easy to imagine as the line between VR and AR experiences blurs with the mixed reality headsets that Meta and Apple are shipping soon. But what about the sense of touch? Sci-fi has historically portrayed a constrained view of touch through haptic suits or gloves, which are binary mediums. The future I want to live in will enable far greater nuance: the ability to pick up an apple or my cup of tea, and in an instant also hold the hand of my virtual grandma (a deeper dive into the power of touch in a future post).

New interfaces: As I alluded to, we will finally solve for touch in this computing paradigm (a sought-after holy grail for almost 50 years). We will also solve for some limited form of brain-computer interface in this phase. I’ve been surprised how little conversation there has been on this subject. The technological progress needed in the visual and audio categories is straightforward. This will happen. However, there’s been little mainstream imagination with regard to human perception outside of these two senses. My friends and investors know that I really dislike the word “haptics”. Every now and then I feel that a word takes on so much baggage from false starts that a new word must be invented to free the field from linear thinking and unnecessary constraints (a post on hardware in the Metaverse is coming shortly). I think we will see a mass consumer touch solution (I think it is the Emerge Wave-series hardware) and an early version of a non-invasive consumer BCI for social use in the Metaverse in this decade.

New types of data: With new interfaces will come new types of data beyond just 3D visuals and spatial audio. I think we are coming close to the human brain’s maximum throughput for audio and visual data processing. We’re listening to audiobooks and watching videos at 1.5x, 2x, even 3x speeds! But these two channels have ceilings, so achieving the next level of digital communication will require opening up another channel. Yes, we will have brain-to-brain tech and the efficiencies it will enable one day, but I’m interested in the near-ish term: what is productizable right now. I’ve talked quite a bit about touch in this new era of computing, but the bigger opportunity that touch unlocks is actually access to emotional data. We technically have this potential today with microfacial data and voice intonation, but on the whole, our ability to interpret and express emotions digitally is infantile. Hell, most of us even struggle to do so IRL. As we gain the tools to more effectively understand others’ and our own emotions, we have the opportunity to increase our capacity for empathy, which I think will define this next decade.

Is it inevitable that we spend the next few years building facsimiles for the Metaverse before we build the really good stuff? I fear that on some level we already are (look at the zero value-add of 99.9% of NFT projects), but I do know that we need to think non-linearly and explore alternative states of consciousness if we want to build something new.

PS. This is a huge reason I’m so excited about the current renaissance of psychedelics (I’ll post my notes on my Ego Death trip another time).